Definitions

Desired output $\boldsymbol{d}\in \mathbb{R}^{1\times 1}$

1st layer input $\boldsymbol{x}^{(1)}\in \mathbb{R}^{n\times 1}$

1st layer weight $\boldsymbol{W}^{(1)}\in \mathbb{R}^{m\times n}$

1st layer bias $\boldsymbol{b}^{(1)}\in \mathbb{R}^{m\times 1}$

1st layer output $\boldsymbol{z}^{(1)}\in \mathbb{R}^{m\times 1}$

2nd layer input $\boldsymbol{x}^{(2)}\in \mathbb{R}^{m\times 1}$

2nd layer weight $\boldsymbol{W}^{(2)}\in \mathbb{R}^{1\times m}$

2nd layer bias $\boldsymbol{b}^{(2)}\in \mathbb{R}^{1\times 1}$

2nd layer output $\boldsymbol{z}^{(2)}\in \mathbb{R}^{1\times 1}$

Network output $\boldsymbol{y} \in \mathbb{R}^{1\times 1}$

Activation functions:

Sigmoid

ReLU
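For reference, here are the two activations and the derivatives that back propagation needs: $\sigma(x) = \frac{1}{1+e^{-x}}$ with $\sigma'(x) = \sigma(x)\,(1-\sigma(x))$, and $\operatorname{ReLU}(x) = \max(0, x)$ with derivative $1$ for $x > 0$ and $0$ for $x < 0$. The sketch below is pure Python. The function names are illustrative. Using $0$ as the ReLU derivative at exactly $x = 0$ is a common convention, not a mathematical fact.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_prime(x: float) -> float:
    """Derivative of the sigmoid: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)

def relu_prime(x: float) -> float:
    """ReLU derivative; undefined at x = 0, where 0 is used by convention."""
    return 1.0 if x > 0 else 0.0

print(sigmoid(0.0), sigmoid_prime(0.0))        # 0.5 0.25
print(relu(-2.0), relu(3.0), relu_prime(3.0))  # 0.0 3.0 1.0
```

The sigmoid is split on the sign of `x` so that `math.exp` only ever receives a non-positive argument and cannot overflow.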

Forward propagation
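The notes list both activations without saying where each is used. The forward pass below is a minimal sketch that assumes ReLU (element-wise) in the first layer and sigmoid at the output:

$$
\begin{aligned}
\boldsymbol{z}^{(1)} &= \boldsymbol{W}^{(1)}\boldsymbol{x}^{(1)} + \boldsymbol{b}^{(1)} &&\in \mathbb{R}^{m\times 1}\\
\boldsymbol{x}^{(2)} &= \operatorname{ReLU}\big(\boldsymbol{z}^{(1)}\big) &&\in \mathbb{R}^{m\times 1}\\
\boldsymbol{z}^{(2)} &= \boldsymbol{W}^{(2)}\boldsymbol{x}^{(2)} + \boldsymbol{b}^{(2)} &&\in \mathbb{R}^{1\times 1}\\
\boldsymbol{y} &= \sigma\big(\boldsymbol{z}^{(2)}\big) &&\in \mathbb{R}^{1\times 1}
\end{aligned}
$$

The dimensions agree with the definitions. An $m\times n$ matrix times an $n\times 1$ vector gives an $m\times 1$ vector, and a $1\times m$ row times an $m\times 1$ vector gives a scalar.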

Define the loss function $J(\boldsymbol{\cdot})$
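The notes do not name a loss. A common choice for a single scalar target, assumed here, is the squared error. The factor $\tfrac{1}{2}$ only cancels the 2 that appears when differentiating:

$$
J(\boldsymbol{y}) = \tfrac{1}{2}\left(\boldsymbol{d} - \boldsymbol{y}\right)^{2}
$$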

Take the derivative of the loss function
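This assumes the squared-error loss $J = \tfrac{1}{2}(\boldsymbol{d} - \boldsymbol{y})^{2}$. Under that assumption, the derivative with respect to the network output is the signed error:

$$
\frac{\partial J}{\partial \boldsymbol{y}} = -\left(\boldsymbol{d} - \boldsymbol{y}\right) = \boldsymbol{y} - \boldsymbol{d}
$$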

Backpropagation
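Applying the chain rule from the output back to the input gives the gradients below. These equations rest on three assumptions:

- the loss is squared error, $J = \tfrac{1}{2}(\boldsymbol{d} - \boldsymbol{y})^{2}$;
- the first layer uses ReLU;
- the output uses a sigmoid, so that $\sigma'(\boldsymbol{z}^{(2)}) = \boldsymbol{y}(1-\boldsymbol{y})$.

In the equations, $\odot$ is element-wise multiplication, and $\mathbb{1}[\cdot]$ is the element-wise indicator. The indicator serves as the ReLU derivative, taken as $0$ at exactly $0$.

$$
\begin{aligned}
\boldsymbol{\delta}^{(2)} &= \frac{\partial J}{\partial \boldsymbol{z}^{(2)}} = (\boldsymbol{y} - \boldsymbol{d})\,\boldsymbol{y}\,(1 - \boldsymbol{y}) &&\in \mathbb{R}^{1\times 1}\\
\frac{\partial J}{\partial \boldsymbol{W}^{(2)}} &= \boldsymbol{\delta}^{(2)}\big(\boldsymbol{x}^{(2)}\big)^{\top} &&\in \mathbb{R}^{1\times m}\\
\frac{\partial J}{\partial \boldsymbol{b}^{(2)}} &= \boldsymbol{\delta}^{(2)} &&\in \mathbb{R}^{1\times 1}\\
\boldsymbol{\delta}^{(1)} &= \frac{\partial J}{\partial \boldsymbol{z}^{(1)}} = \Big(\big(\boldsymbol{W}^{(2)}\big)^{\top}\boldsymbol{\delta}^{(2)}\Big) \odot \mathbb{1}\big[\boldsymbol{z}^{(1)} > 0\big] &&\in \mathbb{R}^{m\times 1}\\
\frac{\partial J}{\partial \boldsymbol{W}^{(1)}} &= \boldsymbol{\delta}^{(1)}\big(\boldsymbol{x}^{(1)}\big)^{\top} &&\in \mathbb{R}^{m\times n}\\
\frac{\partial J}{\partial \boldsymbol{b}^{(1)}} &= \boldsymbol{\delta}^{(1)} &&\in \mathbb{R}^{m\times 1}
\end{aligned}
$$

Each gradient has the same shape as the parameter it belongs to. This is a quick sanity check on the transposes.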

Learning step (learning rate) $\mu$
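The notes do not name an optimizer, so plain gradient descent is assumed here. With learning step $\mu > 0$, each parameter moves against its gradient:

$$
\boldsymbol{W}^{(\ell)} \leftarrow \boldsymbol{W}^{(\ell)} - \mu\,\frac{\partial J}{\partial \boldsymbol{W}^{(\ell)}},\qquad
\boldsymbol{b}^{(\ell)} \leftarrow \boldsymbol{b}^{(\ell)} - \mu\,\frac{\partial J}{\partial \boldsymbol{b}^{(\ell)}},\qquad \ell = 1, 2
$$

A larger $\mu$ speeds up early progress but can make the loss oscillate or diverge. A smaller $\mu$ is more stable but converges more slowly.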

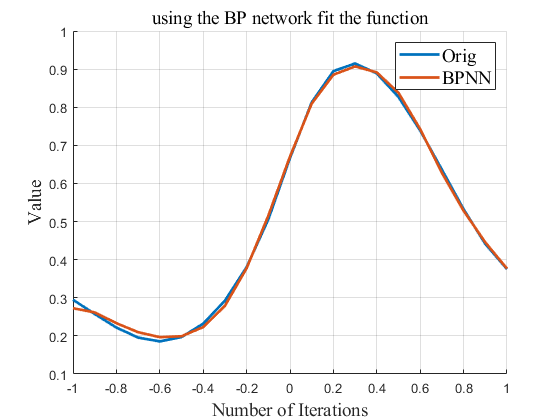

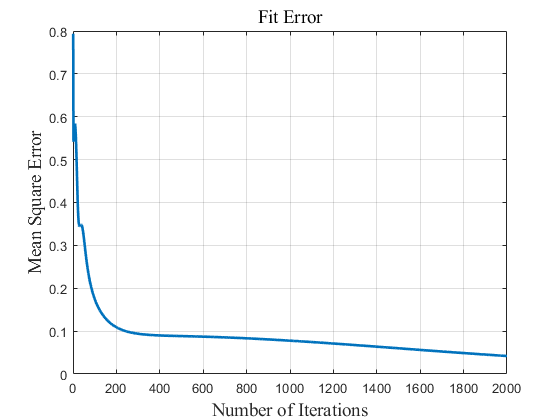

Simulation
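Below is a minimal pure-Python sketch of such a simulation. It assumes a ReLU hidden layer, a sigmoid output, and the squared-error loss $J = \tfrac{1}{2}(d - y)^{2}$, with per-sample gradient descent at learning step `mu`. The toy dataset is hypothetical: points in the unit square, with $d = 1$ when $x_1 + x_2 > 1$ and $d = 0$ otherwise, using $n = 2$ inputs and $m = 8$ hidden units. The helper names `forward`, `loss`, `backward`, and `train` are likewise illustrative.

```python
import math
import random

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def forward(W1, b1, W2, b2, x):
    """One sample: W1 is m x n (list of rows), W2 is the 1 x m row stored flat."""
    z1 = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W1, b1)]
    x2 = [max(0.0, z) for z in z1]                   # hidden ReLU (assumed)
    z2 = sum(w * h for w, h in zip(W2, x2)) + b2     # scalar pre-activation
    return z1, x2, z2, sigmoid(z2)                   # output sigmoid (assumed)

def loss(W1, b1, W2, b2, x, d):
    y = forward(W1, b1, W2, b2, x)[3]
    return 0.5 * (d - y) ** 2                        # squared error (assumed)

def backward(W2, x, d, z1, x2, y):
    """Gradients of the loss for one sample: (dW1, db1, dW2, db2)."""
    delta2 = (y - d) * y * (1.0 - y)                 # dJ/dz2
    dW2 = [delta2 * h for h in x2]                   # delta2 * x2^T
    delta1 = [w * delta2 * (1.0 if z > 0 else 0.0)   # (W2^T delta2) * ReLU'(z1)
              for w, z in zip(W2, z1)]
    dW1 = [[di * xj for xj in x] for di in delta1]   # delta1 * x1^T
    return dW1, delta1, dW2, delta2

def train(samples, n, m, mu=0.5, epochs=200, seed=0):
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(m)]
    b1 = [0.0] * m
    W2 = [rng.uniform(-1, 1) for _ in range(m)]
    b2 = 0.0
    history = []                                     # mean loss per epoch
    for _ in range(epochs):
        total = 0.0
        for x, d in samples:
            z1, x2, _, y = forward(W1, b1, W2, b2, x)
            total += 0.5 * (d - y) ** 2
            dW1, db1, dW2, db2 = backward(W2, x, d, z1, x2, y)
            for i in range(m):                       # gradient-descent step
                W1[i] = [w - mu * g for w, g in zip(W1[i], dW1[i])]
                b1[i] -= mu * db1[i]
                W2[i] -= mu * dW2[i]
            b2 -= mu * db2
        history.append(total / len(samples))
    return (W1, b1, W2, b2), history

# Hypothetical toy data: label 1 when x1 + x2 > 1, else 0.
rng = random.Random(42)
data = []
for _ in range(100):
    x = [rng.random(), rng.random()]
    data.append((x, 1.0 if x[0] + x[1] > 1.0 else 0.0))

params, history = train(data, n=2, m=8)
print(f"mean loss: epoch 1 = {history[0]:.4f}, epoch {len(history)} = {history[-1]:.4f}")
```

Keeping `backward` separate from the update step makes it easy to compare its gradients against finite differences of `loss` before trusting a training run.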